Section: New Results

Lighting and Rendering

Participants : Mahdi Bagher, Laurent Belcour, Georges-Pierre Bonneau, Eric Bruneton, Cyril Crassin, Jean-Dominique Gascuel, Olivier Hoel, Nicolas Holzschuch, Fabrice Neyret, Charles de Rousiers, Cyril Soler.

Non-linear Pre-filtering Methods for Efficient and Accurate Surface Shading

Participants : Eric Bruneton, Fabrice Neyret.

Rendering a complex surface accurately and without aliasing requires evaluating an integral for each pixel, namely a weighted average of the outgoing radiance over the pixel footprint on the surface. The outgoing radiance is itself given by a local illumination equation as a function of the incident radiance and of the surface properties. Computing all of this numerically during rendering can be extremely costly, so efficient rendering, especially in real time, requires precomputation. When the fine-scale surface geometry, reflectance and illumination properties are specified with maps on a coarse mesh (such as color maps, normal maps, horizon maps or shadow maps), a simple and frequently used idea is to pre-filter each map linearly and separately. The averaged outgoing radiance, i.e., the average of the values given by the local illumination equation, is then estimated by applying this equation to the averaged surface parameters. This estimate is inaccurate because the equation is non-linear, due to self-occlusions, self-shadowing, non-linear reflectance functions, etc. Some methods use more complex pre-filtering algorithms to cope with these non-linear effects. In [14] we presented a survey of these methods. We start with a general presentation of the problem of pre-filtering complex surfaces. We then present and classify the existing methods according to the approximations they make to tackle this difficult problem. Finally, an analysis of these methods allows us to highlight some generic tools to pre-filter maps used in non-linear functions, and to identify open issues to address the general problem.
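The non-linearity issue described above can be illustrated with a minimal sketch. It assumes a Blinn-Phong-style specular lobe and a hypothetical pixel footprint of four texels; neither the lobe nor the values come from [14]. It only shows that shading the averaged parameter differs from averaging the shaded values.

```python
def specular_lobe(n_dot_h: float, shininess: float) -> float:
    """Blinn-Phong-style specular term; non-linear in n_dot_h (illustrative choice)."""
    return max(n_dot_h, 0.0) ** shininess


def shade_then_average(values, shininess):
    """Reference: evaluate the lobe per texel, then average over the footprint."""
    return sum(specular_lobe(v, shininess) for v in values) / len(values)


def average_then_shade(values, shininess):
    """Naive linear pre-filtering: average the parameter, then evaluate the lobe once."""
    return specular_lobe(sum(values) / len(values), shininess)


# Hypothetical footprint: n.h values of four texels covered by one pixel.
footprint = [1.0, 0.9, 0.6, 0.3]
reference = shade_then_average(footprint, shininess=32.0)
naive = average_then_shade(footprint, shininess=32.0)
print(f"reference = {reference:.6f}, naive = {naive:.6f}")
```

With a sharp lobe, the naive estimate is far darker than the reference, because the single bright texel is averaged away before the lobe is evaluated.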

Frequency-Based Kernel Estimation for Progressive Photon Mapping

Participants : Laurent Belcour, Cyril Soler.

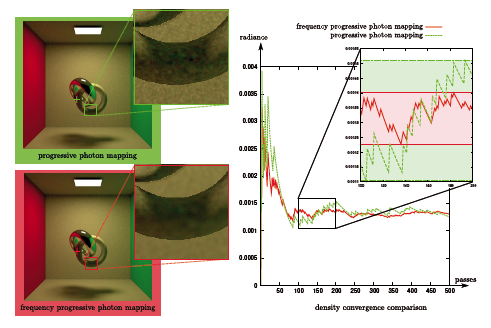

We have developed an extension to Hachisuka et al.'s Progressive Photon Mapping (PPM) algorithm [32] in which we estimate the radius of the density estimation kernels using frequency analysis of light transport [29]. We predict the local radiance frequency at the surface of objects using a Gaussian approximation, and use it to drive the size of the density estimation kernels in order to accelerate convergence (see Figure 3). The key is to attach frequency information to a small proportion of the photons, called frequency photons: in addition to contributing to the density estimation, they provide frequency information. This work has been published in [20].
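For context, the sketch below shows the radius reduction step of standard PPM, together with one possible way of deriving a kernel radius from a predicted bandwidth. The first function follows the usual PPM update rule; the second is purely an illustrative assumption (inverse proportionality with clamping, hypothetical parameter names) and is not the estimator of [20].

```python
import math


def ppm_update(radius, n_accum, m_new, alpha=0.7):
    """Standard PPM step: keep a fraction alpha of the m_new photons found
    inside the kernel and shrink the radius accordingly."""
    if m_new == 0:
        return radius, n_accum  # nothing gathered in this pass, nothing to update
    n_next = n_accum + alpha * m_new
    r_next = radius * math.sqrt(n_next / (n_accum + m_new))
    return r_next, n_next


def radius_from_bandwidth(bandwidth, scale=1.0, r_min=1e-3, r_max=1.0):
    """Hypothetical mapping: high predicted frequency gives a small kernel.
    scale, r_min and r_max are illustrative parameters, not values from [20]."""
    if bandwidth <= 0.0:
        return r_max
    return min(r_max, max(r_min, scale / bandwidth))


radius, count = radius_from_bandwidth(bandwidth=4.0), 0.0
for photons_in_kernel in (12, 9, 7):
    radius, count = ppm_update(radius, count, photons_in_kernel)
```

The intent of such a mapping is that smooth regions start with large kernels (less noise) while high-frequency regions start with small ones (less blur).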

Efficiently Visualizing Massive Tetrahedral Meshes with Topology Preservation

Participant : Georges-Pierre Bonneau.

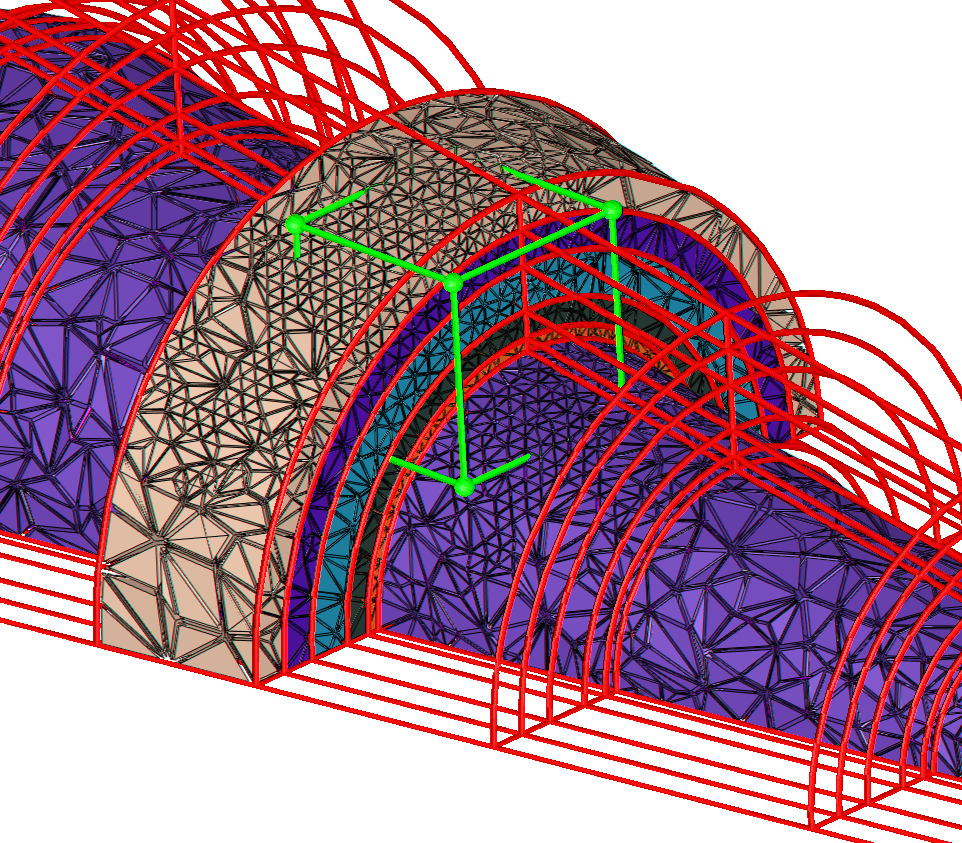

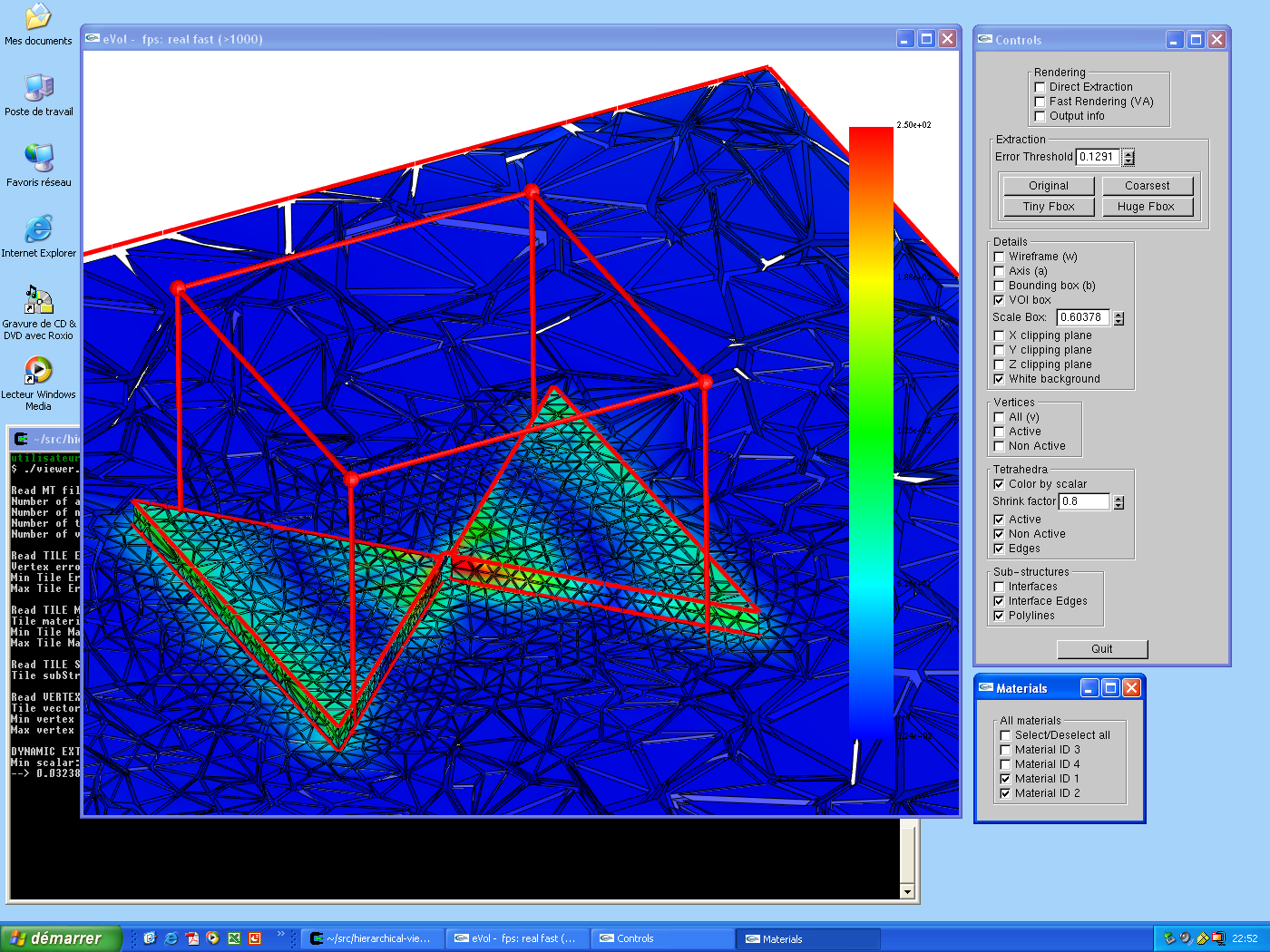

This work is the result of a collaboration with S. Hahmann from the EVASION team-project and Prof. Hans Hagen, partly carried out during a sabbatical of G.-P. Bonneau at the University of Kaiserslautern, Germany. Interdisciplinary efforts in modeling and simulating phenomena have led to complex multi-physics models involving different physical properties and materials in the same system. Within a 3d domain, substructures of lower dimensions appear at the interface between different materials. Correspondingly, an unstructured tetrahedral mesh used for such a simulation includes 2d and 1d substructures embedded in the vertices, edges and faces of the mesh. The simplification of such tetrahedral meshes must preserve (1) the geometry and the topology of the 3d domain, (2) the simulated data and (3) the geometry and topology of the embedded substructures. This work focuses on the preservation of the topology of 1d and 2d substructures embedded in an unstructured tetrahedral mesh during edge collapse simplification. We derive a robust algorithm, based on combinatorial topology results, to determine whether an edge can be collapsed without changing the topology of either the mesh or any of the embedded substructures. Based on this algorithm we have developed a system for simplifying scientific datasets defined on irregular tetrahedral meshes with substructures, illustrated in Figure 4. We presented the system and demonstrated its power on real-world scientific datasets from electromagnetism simulations in the Springer book chapter [27].
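As an illustration of the kind of combinatorial test involved, the sketch below implements the classical link condition for an edge collapse in a simplicial complex: the edge (u, v) passes if the intersection of the links of u and v equals the link of the edge. This is only the basic test on the mesh itself. It does not handle embedded substructures, and it is not claimed to be the algorithm of [27]; all function names are hypothetical.

```python
from itertools import combinations


def closure(top_simplices):
    """All non-empty faces of the given simplices, as frozensets of vertex ids."""
    faces = set()
    for simplex in top_simplices:
        verts = tuple(simplex)
        for k in range(1, len(verts) + 1):
            faces.update(frozenset(c) for c in combinations(verts, k))
    return faces


def link(complex_, simplex):
    """Simplices disjoint from `simplex` whose union with it is in the complex."""
    simplex = frozenset(simplex)
    return {t for t in complex_ if not (t & simplex) and (t | simplex) in complex_}


def satisfies_link_condition(complex_, u, v):
    """Classical link condition for collapsing edge (u, v)."""
    return link(complex_, {u}) & link(complex_, {v}) == link(complex_, {u, v})


# Two tetrahedra glued along the face (1, 2, 3).
two_tets = closure([(0, 1, 2, 3), (1, 2, 3, 4)])
# A hollow triangle: three edges and no face.
hollow_triangle = closure([(0, 1), (1, 2), (0, 2)])

print(satisfies_link_condition(two_tets, 1, 2))
print(satisfies_link_condition(hollow_triangle, 0, 1))
```

The first edge passes the test, while collapsing an edge of the hollow triangle would destroy the loop and is rejected.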

Real-Time Rough Refraction

Participants : Nicolas Holzschuch, Charles de Rousiers.

We have developed an algorithm to render objects made of transparent materials with rough surfaces in real time, under distant illumination. Rough surfaces cause wide scattering as light enters and exits objects, which significantly complicates the rendering of such materials. We present two contributions to approximate the successive scattering events at interfaces due to rough refraction: first, an approximation of the Bidirectional Transmittance Distribution Function (BTDF) using spherical Gaussians, suitable for real-time estimation of environment lighting using pre-convolution; second, a combination of cone tracing and macro-geometry filtering to efficiently integrate the scattered rays at the exiting interface of the object. In the I3D paper [24] we demonstrate the quality of our approximation by comparison against stochastic ray tracing. This work is illustrated in Figure 5.
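To make the spherical Gaussian representation concrete, here is a minimal sketch of the usual definition of a spherical Gaussian lobe and of its closed-form integral over the sphere. The parameter names (axis, sharpness, amplitude) are conventional choices assumed for illustration; the actual BTDF fit of [24] is not reproduced here.

```python
import math


def sg_eval(direction, axis, sharpness, amplitude):
    """G(v) = amplitude * exp(sharpness * (dot(v, axis) - 1)) for unit vectors."""
    cos_angle = sum(d * a for d, a in zip(direction, axis))
    return amplitude * math.exp(sharpness * (cos_angle - 1.0))


def sg_integral(sharpness, amplitude):
    """Closed-form integral of the lobe over the whole sphere."""
    return 2.0 * math.pi * amplitude * (1.0 - math.exp(-2.0 * sharpness)) / sharpness


def sg_integral_numeric(sharpness, amplitude, steps=20000):
    """Midpoint-rule check, integrating over t = cos(angle to the axis)."""
    dt = 2.0 / steps
    total = 0.0
    for i in range(steps):
        t = -1.0 + (i + 0.5) * dt
        total += amplitude * math.exp(sharpness * (t - 1.0)) * dt
    return 2.0 * math.pi * total


peak = sg_eval((0.0, 0.0, 1.0), (0.0, 0.0, 1.0), sharpness=10.0, amplitude=0.5)
```

Having such closed forms is one reason these lobes are convenient for real-time use: a lobe is described by a handful of numbers and can be integrated without sampling.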

Interactive Indirect Illumination Using Voxel Cone Tracing

Participants : Cyril Crassin, Fabrice Neyret.

Indirect illumination is an important element of realistic image synthesis, but its computation is expensive and highly dependent on the complexity of the scene and on the BRDF of the surfaces involved. While off-line computation and pre-baking can be acceptable in some cases, many applications (games, simulators, etc.) require real-time or interactive approaches to evaluate indirect illumination. We present in the Pacific Graphics paper [16] a novel algorithm to compute indirect lighting in real time that avoids costly precomputation steps and is not restricted to low-frequency illumination (see Figure 6). The algorithm is based on a hierarchical voxel octree representation, generated and updated on the fly from a regular scene mesh, coupled with an approximate voxel cone tracing that allows for a fast estimation of the visibility and incoming energy. Our approach can manage two light bounces for both Lambertian and glossy materials at interactive frame rates (25-70 FPS). It exhibits almost scene-independent performance and can handle complex scenes with dynamic content thanks to an interactive octree-voxelization scheme. In addition, we demonstrate that our voxel cone tracing can be used to efficiently estimate ambient occlusion. A preliminary version of this work was published as a poster [22], and insights into the method were given in a Siggraph 2011 talk [23].

The poster [22] received the Best Poster Award at I3D'2011.
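The cone marching idea behind voxel cone tracing can be sketched as follows, under simplifying assumptions: scalar radiance, a hypothetical `sample(position, mip_level)` callback standing in for the pre-filtered voxel octree lookup, and front-to-back compositing. The step size and termination threshold are illustrative choices, not values taken from [16].

```python
import math


def trace_cone(sample, origin, direction, aperture, voxel_size, max_dist):
    """March along a cone, reading coarser pre-filtered data as the cone widens.

    sample(position, mip_level) -> (radiance, opacity) is a hypothetical lookup
    into a pre-filtered voxel hierarchy; it is not an API from [16].
    """
    radiance, opacity = 0.0, 0.0
    t = voxel_size  # start one voxel away to avoid sampling the emitting surface
    while t < max_dist and opacity < 0.99:
        diameter = max(voxel_size, 2.0 * t * math.tan(0.5 * aperture))
        level = math.log2(diameter / voxel_size)  # 0 is the finest level
        position = tuple(o + t * d for o, d in zip(origin, direction))
        s_radiance, s_opacity = sample(position, level)
        weight = (1.0 - opacity) * s_opacity  # front-to-back compositing
        radiance += weight * s_radiance
        opacity += weight
        t += 0.5 * diameter  # step proportional to the local cone width
    return radiance, opacity


def uniform_fog(position, level):
    """Toy scene for the usage example: constant radiance 1.0, opacity 0.5."""
    return 1.0, 0.5


result = trace_cone(uniform_fog, (0.0, 0.0, 0.0), (0.0, 0.0, 1.0),
                    aperture=math.radians(30.0), voxel_size=0.1, max_dist=10.0)
```

The accumulated opacity doubles as a visibility estimate, which is why the same loop can be reused for an ambient occlusion approximation.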

Fast Multi-Resolution Shading of Acquired Reflectance Using Bandwidth Prediction

Participants : Mahdi Bagher, Laurent Belcour, Nicolas Holzschuch, Cyril Soler.

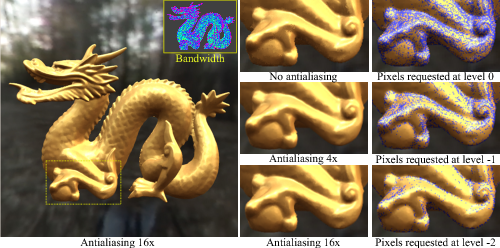

Shading complex materials such as acquired reflectances in multi-light environments is computationally expensive. Estimating the shading integral involves stochastic sampling of the incident illumination independently at several pixels. The number of samples required for this integration varies across the image, depending on an intricate combination of several factors. Even when visibility is ignored, adaptively distributing a computational budget across the pixels for shading is a challenging problem. In the paper [28] we present a systematic approach to accelerate shading by rapidly predicting the approximate spatial and angular variation in the local light field arriving at each pixel. Our estimate of this variation takes the form of a local bandwidth, and accounts for combinations of a variety of factors: the reflectance at the pixel, the nature of the illumination, the local geometry, and the camera position relative to the geometry and lighting. An illustration is given in Figure 7. The speed-up obtained with our method comes from a combination of two factors. First, rather than shading every pixel, we use the predicted variation to direct computational effort towards regions of the image with high local variation. Second, we use the predicted variance of the shading integrals to distribute a fixed total budget of shading samples across the pixels. For example, reflection off specular objects is estimated using fewer samples than off diffuse objects.
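The second factor, distributing a fixed sample budget according to predicted variance, can be sketched as a proportional allocation. The rule below (shares proportional to variance, rounded with a largest-remainder step so that the total matches the budget) is an assumption made for illustration; the actual allocation strategy of [28] may differ.

```python
def allocate_samples(predicted_variance, budget):
    """Split `budget` shading samples across pixels in proportion to variance.

    Illustrative rule only: floor the proportional shares, then hand the
    remaining samples to the pixels with the largest fractional parts.
    """
    n = len(predicted_variance)
    if n == 0:
        return []
    total = sum(predicted_variance)
    if total <= 0.0:
        shares = [budget / n] * n  # no information: spread the budget uniformly
    else:
        shares = [budget * v / total for v in predicted_variance]
    counts = [int(s) for s in shares]
    leftover = budget - sum(counts)
    by_remainder = sorted(range(n), key=lambda i: shares[i] - counts[i], reverse=True)
    for i in by_remainder[:max(leftover, 0)]:
        counts[i] += 1
    return counts


# Hypothetical predicted variances for four pixels and a budget of 64 samples.
counts = allocate_samples([0.1, 0.4, 0.4, 0.1], budget=64)
```

Pixels with a higher predicted variance receive more samples, while the total stays equal to the budget.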